As a seasoned representative of Bestgamingpro.com, I’ve followed the evolution of fiber optic technology closely and understand what the people who work in this field need. Ranking the “7 Best Fiber Optic Cleavers” of 2024 is not just about listing tools. It’s about meeting the real demands of precision, durability, and value that professionals and enthusiasts alike care about.

I understand the challenges you face: sifting through endless options, worrying whether a cleaver will deliver a clean cut every time, and balancing quality against cost. With over a decade of experience in the telecommunications sector, I have watched fiber optic cleavers evolve and seen how critical they are to the success of your work.

Our readers, a diverse group of telecom professionals, network engineers, and dedicated DIY enthusiasts, want more than just a tool. You want equipment that improves your efficiency, precision, and reliability with every cut, and that understanding shapes every recommendation we make.

This guide combines hands-on expertise with an understanding of your needs to lead you to the best fiber optic cleavers of 2024. Each cleaver has been tested and evaluated on the factors that matter most in practice: cleave angle, blade life, fiber and cable compatibility, build quality, and portability. My goal is to make your search easier and help you choose with confidence, whatever your role in the dynamic world of fiber optics.

1. Fiber Cleaver FTTH Fiber Optic Tools High Precision

At Bestgamingpro.com, we’re proud to place the Fiber Cleaver FTTH at the top of our “7 Best Fiber Optic Cleavers in 2024” list. This precision tool is built for the exacting demands of fiber optic professionals. It handles coating diameters from 250 to 900 µm and a bare fiber diameter of 125 µm, which makes it highly versatile.

The cleaver’s standout feature is its cleave angle accuracy, with angles from 0.5 to 0.8 degrees that yield consistently clean cuts. Combined with a rated blade life of 48,000 cleaves, this makes it an indispensable tool for fiber optic projects. It’s not just a cleaver; it’s a precision instrument that delivers reliability and efficiency with every use.

2. Automatic Stability Fiber Optic Cleaver FC-30 Cutter

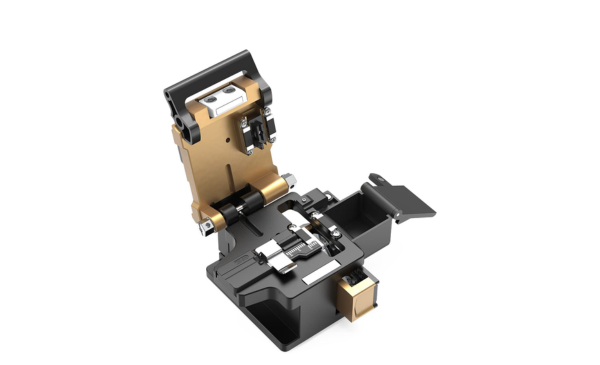

The Automatic Stability Fiber Optic Cleaver FC-30 takes second place on our list, and for good reason: it combines precision with versatility. Its tungsten steel blade resists wear and corrosion and is rated for 48,000 cleaves, while a cleave angle of less than 0.5 degrees ensures high-precision cuts.

The FC-30 uses a double fixture that accommodates a wide range of optical fibers, from 250 μm to 3.0 mm. A high-capacity waste bin and a silicone pressure pad add practicality, making it a strong choice for professionals who need efficient, reliable cleaving.

3. Signal Fire Optical Fiber Cleaver

Third place goes to the Signal Fire Optical Fiber Cleaver, a tool that balances precision and versatility. It delivers a cleave angle of less than 0.5°, making it an essential asset for preparing fiber for fusion splicing.

A built-in fiber holder that accepts a wide range of single fibers and a readable scale for accurate cleave lengths make it useful in a variety of settings. The design strikes a good balance between lightweight portability and rugged durability, so it is well suited to field work. With a rated blade life of 48,000 cleaves, it is an indispensable tool for technicians and fiber splicers who need precision and longevity from their equipment.

4. FYBOPTWU FTTH Optical Fiber Cleaver

The FYBOPTWU FTTH Optical Fiber Cleaver earns fourth place on our list. Its 3-in-1 functionality and compact design make it a versatile, portable choice for professionals on the go. It handles fiber and cable diameters from 0.25 mm (250 µm) coated fiber up to 3.0 mm FTTH drop cable, covering a wide range of cutting needs.

Its upgraded metal construction and detachable waste-fiber collection box add to its durability and practicality, and the tungsten steel blade, rated for up to 50,000 cleaves, delivers consistent, high-precision cuts. This cleaver is a reliable partner for efficient, precise fiber optic splicing.

5. 12 Ribbon Fiber Optic Cleaver High Precision

The 12 Ribbon Fiber Optic Cleaver takes fifth place on our list, standing out for its precision and automation. Designed for ribbons of 1 to 12 fibers, it offers a typical cleave angle of about 0.5 degrees.

Fully automatic operation simplifies cleaving: a single press produces a precise cut. Its durable tungsten steel blade is rated for 48,000 cleaves, its metal body stays stable under varied conditions, and multi-functional fiber holders add versatility. This cleaver is a standout example of innovation and efficiency in fiber optic cleaving.

6. Optical Fiber Cleaver HS-30 with Scrap box

At number 6 on our list is the Optical Fiber Cleaver HS-30. It stands out for its compatibility with all AFL Telecommunications fusion splicers, which makes it a versatile choice across professional settings. Its robust 16-position blade is rated for 48,000 single-fiber cleaves, ensuring longevity and consistent performance.

The cleaver also excels in convenience, with a built-in waste collector that safely stores fiber fragments. This improves efficiency and also prioritizes workplace safety, since loose fiber shards are sharp and hard to see. The HS-30 is a complete solution for fast, precise fiber optic cleaving.

7. Precision at Its Finest: 12-Position Fiber Optic Cleaver

Rounding out our list is the 12-Position Fiber Optic Cleaver, a symbol of precision and reliability. Its clean, precise cuts help minimize splice loss, keeping network connections performing at their best. The ergonomic design and effortless operation make it accessible to professionals and novices alike.

It is also versatile: it is compatible with a variety of fiber types and diameters, making it suitable for a wide range of applications, and its robust construction is built to last in demanding environments.

In addition, the 12-position blade cartridge reduces how often the blade needs replacing, and the compact body makes it easy for field technicians to carry. This cleaver is a complete solution for achieving seamless connections and flawless results in fiber optic installations.
