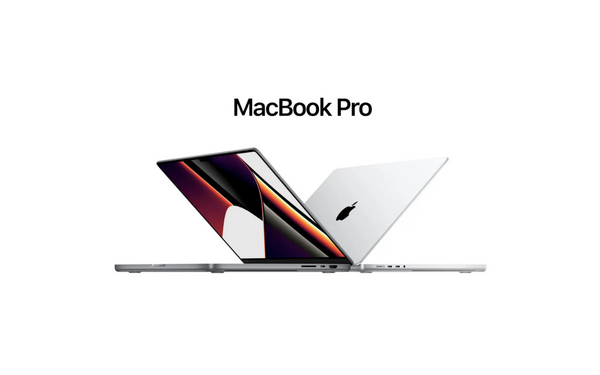

Speculation is mounting that Apple will announce two new MacBook Pro models featuring M2 Pro and M2 Max chips later today.

The first clue is a registration for a forthcoming MacBook Pro with Wi-Fi 6E support in a Canadian database, the Industry Canada Radio Equipment List, as flagged by Wade Penner on Twitter.

New Apple MacBook Pro model A2779 seen in Industry Canada Radio Equipment List database. Approved on January 11, 2023. Likely the new M2 Max or M2 Pro. Device will support WiFi 6E / 6GHz band. pic.twitter.com/KmSo1aGp7G (January 16, 2023)

We are unfamiliar with this source, but MacRumors has checked and confirmed that the filing does exist. Still, as with any leak, it should be taken with a generous pinch of salt.

Second, there is an independent rumour from reputable sources (such as Apple leaker Jon Prosser) that Apple will unveil a new product later today.

We have no idea what this product might be; all we’ve been told is that it will be announced through a simple press release rather than at a launch event.

Putting the leak and the rumour together, we arrive at the hypothesis that the new MacBook Pros will be unveiled later today.

The new MacBook Pros may disappoint

There have been persistent rumours that Apple would release new 14- and 16-inch MacBook Pros in 2023 (they were originally expected to debut in late 2022). The most recent reports suggest that their release may slip to the second quarter of 2023 rather than the first.

Nothing is guaranteed, of course, but based on everything reported so far, we shouldn’t expect the MacBook Pros to show up quite this soon.

Then again, a regulatory filing doesn’t always mean a launch is imminent. Apple might also reveal the laptops now and set the on-sale date further down the line. Either way, the filing is a good sign that we don’t have long to wait.

Still, we’re not entirely convinced, and a different product may well be shown today instead. One highly anticipated candidate is a new Mac mini.

If the 14-inch and 16-inch MacBook Pros really are unveiled via press release, that would support another recent theme from the rumour mill: that the laptops have changed very little. Mark Gurman, another major Apple leaker, recently claimed that they will keep the same design and features as the current models, with the M2 Pro and M2 Max chips as the only real upgrade. Even then, the performance gain is expected to be “marginal,” a word that won’t get anyone excited.

If that’s the case, a quiet rollout via press release makes the most sense. It’s hard to call, since there are good arguments both for and against a MacBook Pro launch today, but we should find out soon whether an announcement is coming and what it is. Interest in Apple’s announcement is certainly running high.
